This weekend I added a particle system framework to Transcendence Engine (my own 3D games and simulations engine, which I intend to use for future 3D projects). I already had a sprite / billboard class in the engine, so one would think that implementing particles would be as simple as creating many of these and controlling their behaviour at run-time based on various parameters read in from some data file.

However, this would have been a very inefficient solution because it would mean that each particle is rendered in a separate draw call. Once we reach a few hundred particles we would incur significant driver overhead and our graphics engine would quickly become CPU limited, preventing us from leveraging the power of the GPU.

The solution instead was to create a dynamic mesh class, which has a dynamic vertex buffer (but static index buffer), allowing us to modify the vertex data on the fly. In this way, the entire particle system is treated as a single object and is rendered using a single draw call instead of potentially many hundreds of draw calls.
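To make the idea concrete, here is a minimal sketch of the batching scheme, not the engine's actual code. The `Vertex` and `Particle` layouts and the function names `buildQuadIndices` and `fillQuadVertices` are hypothetical, and the quads are laid out in the XY plane rather than being turned to face the camera. The index buffer is built once, while the vertex array is rewritten every frame before being uploaded to the dynamic vertex buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical vertex layout; the engine's real format is not shown in the post.
struct Vertex {
    float x, y, z;  // position
    float u, v;     // texture coordinates
};

// Hypothetical per-particle state needed to build a quad.
struct Particle {
    float x, y, z;   // centre position
    float halfSize;  // half of the quad's edge length
};

// Built once: two triangles (six indices) per quad. This never changes,
// which is why the index buffer can be static.
std::vector<std::uint32_t> buildQuadIndices(std::size_t maxParticles) {
    std::vector<std::uint32_t> indices;
    indices.reserve(maxParticles * 6);
    for (std::size_t i = 0; i < maxParticles; ++i) {
        const std::uint32_t base = static_cast<std::uint32_t>(i * 4);
        indices.push_back(base);
        indices.push_back(base + 1);
        indices.push_back(base + 2);
        indices.push_back(base);
        indices.push_back(base + 2);
        indices.push_back(base + 3);
    }
    return indices;
}

// Rebuilt every frame: four corners per live particle. The result would be
// copied into the dynamic vertex buffer and drawn with a single call.
void fillQuadVertices(const std::vector<Particle>& particles,
                      std::vector<Vertex>& out) {
    out.clear();
    out.reserve(particles.size() * 4);
    for (const Particle& p : particles) {
        assert(p.halfSize >= 0.0f);
        const float h = p.halfSize;
        out.push_back({p.x - h, p.y - h, p.z, 0.0f, 1.0f});
        out.push_back({p.x + h, p.y - h, p.z, 1.0f, 1.0f});
        out.push_back({p.x + h, p.y + h, p.z, 1.0f, 0.0f});
        out.push_back({p.x - h, p.y + h, p.z, 0.0f, 0.0f});
    }
}
```

With this layout, drawing N live particles means drawing the first 6 * N indices of the static index buffer.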

I spent a fair bit of time on designing this part of the system, because having a well-designed dynamic mesh class is hugely advantageous to other areas of the engine as well. For example, my ocean surfaces are implemented as flat, well-tessellated grids whose vertices are deformed in the vertex program based on the summation of sine waves. This looks pretty decent by older standards, but does not hold up against some of the uber-realistic water shaders we see in demos and cutting-edge games today. Using sine waves is limiting -- ideally you want Perlin noise. This is doable in vertex shaders if you use vertex texture sampling (and I do intend to explore this route). However, performing the vertex deformation in the application itself rather than in the shaders is advantageous because (a) vertex texture sampling is not always available, and (b) your application has full knowledge of what is happening in the scene and can thus have the water surface respond to other stimuli (for example, barrels or crates being dropped into the water). In short, a dynamic mesh class has many uses beyond particle systems, and so some careful design and planning was warranted.
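As a rough illustration of application-side deformation, the sketch below evaluates a sum of sine waves on the CPU. The `Wave` struct, its fields and the `oceanHeight` function are assumptions made for this example and are not taken from the engine. The returned height would be written into the y component of each grid vertex of a dynamic mesh.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical description of one directional sine wave.
struct Wave {
    float amplitude;   // peak height
    float wavelength;  // distance between crests
    float speed;       // how fast crests travel along the direction
    float dirX, dirZ;  // unit-length travel direction on the XZ plane
};

// Height of the water surface at (x, z) at the given time: the sum of all waves.
float oceanHeight(const std::vector<Wave>& waves, float x, float z, float time) {
    const float twoPi = 6.28318530718f;
    float height = 0.0f;
    for (const Wave& w : waves) {
        assert(w.wavelength > 0.0f);
        const float k = twoPi / w.wavelength;  // wavenumber
        const float phase = k * (w.dirX * x + w.dirZ * z - w.speed * time);
        height += w.amplitude * std::sin(phase);
    }
    return height;
}
```

Because this runs in the application, a local disturbance (for example, a ripple term around a dropped crate) could simply be added to the returned height before the vertex is written.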

Having implemented and tested my dynamic mesh class I was ready to move on to using it to implement a particle system class. This part was pretty straightforward. I've worked on this sort of thing at both Digital Extremes and ThoroughTec, and have also worked with Ogre's particle systems a lot; most particle system classes tend to expose a similar set of parameters.

Mine is at a fairly early stage and does not support anything too fancy. Currently the parameters that control particle system behaviour are:

- particle life time
- particle fade in time
- particle fade out time
- initial particle dimensions
- scale rate
- emission frequency
- number of texture divisions
- initial velocity in object space
- acceleration in object space
- acceleration in world space

I chose to have explicit fade in and fade out times, assuming that particles will always start off fully transparent and fade in, and will also always fade out again at the end (any other behaviour tends to look jarring). My scale rate is additive rather than multiplicative because I prefer a stable, constant change in size rather than having particles shrink drastically the smaller they are, or quickly balloon out when they become large. Most particle systems I've worked with expose an emission rate (for example, emit 10 particles per second), whereas what I call an emission frequency is the time between emissions (for example, emit a particle every 0.1 seconds). I decided that an emission frequency is a little more intuitive, perhaps not to us programmer types, but to artists. For example, a particle emission rate of 0.5 means emit a particle every two seconds, but may confuse anybody who is not mathematically inclined (does it mean we emit half a particle every second?). I'm not trying to insult the intelligence of the intended users here -- I just feel that if there is an alternative that is clearer and less likely to cause confusion, that is the better option.
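The three choices above can be sketched in a few lines. This is only an illustration under my own assumptions: the function names `particleAlpha`, `particleSize` and `emitCount` are hypothetical, the fades are assumed to be linear, and the emission parameter is treated as the number of seconds between particles.

```cpp
#include <algorithm>
#include <cassert>

// Alpha from explicit fade in / fade out times: 0 at birth, ramps up to 1,
// then ramps back down to 0 at the end of the particle's life.
float particleAlpha(float age, float lifeTime, float fadeIn, float fadeOut) {
    assert(lifeTime > 0.0f && fadeIn >= 0.0f && fadeOut >= 0.0f);
    if (age <= 0.0f || age >= lifeTime) return 0.0f;
    float alpha = 1.0f;
    if (fadeIn > 0.0f) alpha = std::min(alpha, age / fadeIn);
    if (fadeOut > 0.0f) alpha = std::min(alpha, (lifeTime - age) / fadeOut);
    return alpha;
}

// Additive scaling: the size changes by a constant amount per second,
// regardless of how large or small the particle currently is.
float particleSize(float initialSize, float scaleRate, float age) {
    return std::max(0.0f, initialSize + scaleRate * age);
}

// Interval-based emission: accumulate elapsed time and report how many
// particles are due this frame. `interval` is the seconds between particles.
int emitCount(float& accumulator, float interval, float dt) {
    assert(interval > 0.0f && dt >= 0.0f);
    accumulator += dt;
    int count = 0;
    while (accumulator >= interval) {
        accumulator -= interval;
        ++count;
    }
    return count;
}
```

Carrying the leftover time in `accumulator` keeps the long-run emission count independent of the frame rate.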

The last point is the velocity and acceleration. Ordinarily you would just need a single acceleration value in world space to allow for forces like gravity and wind. This would allow, for example, smoke or dust to sail across the scene as if being carried by the wind, or water emitted from a fountain to eventually slow and fall back to the ground.

However, I chose to add an additional parameter here, differentiating between acceleration in object space and in world space. Having an acceleration that works in object space (by this I mean that it works relative to the position and orientation of the emitter) allows for interesting particle behaviours that work as intended even when you rotate or move the particle system as a whole. For example, I have used it for an explosion effect where the particles, after rushing outward for a while, suddenly reverse direction and rush back inward, giving an imploding effect instead. Not particularly realistic, but great for fantasy or sci-fi games.
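One way to combine the two accelerations is sketched below; this is a guess at a reasonable approach rather than a description of what Transcendence does internally. Particles are stored in world space, and object-space vectors are rotated by the emitter's orientation before use. To keep the example short the orientation is reduced to a single yaw angle about the Y axis, and `Vec3`, `rotateY`, `spawn` and `integrate` are all hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }

// Rotates v about the Y axis. Stands in for the emitter's full orientation.
Vec3 rotateY(Vec3 v, float yaw) {
    const float c = std::cos(yaw);
    const float s = std::sin(yaw);
    return {c * v.x + s * v.z, v.y, -s * v.x + c * v.z};
}

// Particle state kept in world space.
struct Particle {
    Vec3 position;
    Vec3 velocity;
};

// The initial velocity is given in object space, so rotate it into world space.
Particle spawn(Vec3 emitterPosition, float emitterYaw, Vec3 initialVelocityObject) {
    return {emitterPosition, rotateY(initialVelocityObject, emitterYaw)};
}

// One semi-implicit Euler step. The object-space acceleration follows the
// emitter's orientation; the world-space one (gravity, wind) does not.
void integrate(Particle& p, float emitterYaw, Vec3 accelObject,
               Vec3 accelWorld, float dt) {
    assert(dt >= 0.0f);
    const Vec3 accel = rotateY(accelObject, emitterYaw) + accelWorld;
    p.velocity = p.velocity + accel * dt;
    p.position = p.position + p.velocity * dt;
}
```

An object-space acceleration that opposes the initial object-space velocity gives the reversal described above, and it keeps working however the emitter is turned.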

Another important part of implementing the particle system framework was integrating it into Transcendence Engine's resource system. Transcendence supports hot-loading, which in our case means either that (a) if the relevant option is enabled, the engine picks up any modification to a resource immediately and the results are instantly visible in-game, without you having to restart, or (b) if the relevant option is not enabled (for performance reasons), you simply need to instruct the engine to check for resource changes by selecting an option in a menu or hitting a hotkey. It is very gratifying to tweak a few textures, re-size a mesh, alter a line in a shader, then alt-tab back to the engine (which was running the whole time), hit F11 and watch all of the changes instantly take effect.

It seemed to me that tweaking particle parameters was another situation where instant feedback would be very advantageous, and so from the start I planned it so that it would fit into Transcendence's resource framework. The particle system itself is not regarded as a resource (since multiple instances of the same type may exist). Instead, I provide a separate class called a ParticleSystemTemplate, which inherits from the Resource class, provides its own resource handler, and thus gets all of this behaviour for free. Each time a particle system is created, it asks the particles resource handler for a pointer to the particle system template associated with the given file name. If we modify that file while the engine is running, the changes are detected and the resource handler instructs the particle system template to re-load itself. The particle system class, in its update function, detects that a dirty flag has been set on the particle system template, and it then re-constructs itself.
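The relationship between the template, its handler and the instances might look roughly like the sketch below. Only the name `ParticleSystemTemplate` comes from the engine; `ParticleTemplateHandler`, `onFileChanged`, the single `lifeTime` parameter and the file name in the usage note are hypothetical. I have also swapped the dirty flag for a version counter, purely so that several instances sharing one template can each notice a reload without anyone having to decide when to clear the flag.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Shared, reloadable description of a particle system type.
struct ParticleSystemTemplate {
    float lifeTime = 1.0f;  // stand-in for the full parameter set
    unsigned version = 0;   // bumped on every reload (replaces the dirty flag)

    void reload(float newLifeTime) {
        lifeTime = newLifeTime;
        ++version;
    }
};

// Hands out one shared template per file name and triggers reloads.
class ParticleTemplateHandler {
public:
    std::shared_ptr<ParticleSystemTemplate> get(const std::string& fileName) {
        std::shared_ptr<ParticleSystemTemplate>& slot = templates_[fileName];
        if (!slot) slot = std::make_shared<ParticleSystemTemplate>();
        return slot;
    }

    // Would be called when the engine detects that the file has changed.
    void onFileChanged(const std::string& fileName, float newLifeTime) {
        auto it = templates_.find(fileName);
        if (it != templates_.end()) it->second->reload(newLifeTime);
    }

private:
    std::map<std::string, std::shared_ptr<ParticleSystemTemplate>> templates_;
};

// An instance: not a resource itself, it only points at its template.
class ParticleSystem {
public:
    explicit ParticleSystem(std::shared_ptr<ParticleSystemTemplate> t)
        : template_(std::move(t)) {
        assert(template_ != nullptr);
        rebuild();
    }

    // Re-constructs itself when the template has changed since the last look.
    void update() {
        if (seenVersion_ != template_->version) rebuild();
    }

    float lifeTime() const { return lifeTime_; }
    int rebuildCount() const { return rebuilds_; }

private:
    void rebuild() {
        lifeTime_ = template_->lifeTime;
        seenVersion_ = template_->version;
        ++rebuilds_;
    }

    std::shared_ptr<ParticleSystemTemplate> template_;
    float lifeTime_ = 0.0f;
    unsigned seenVersion_ = 0;
    int rebuilds_ = 0;
};
```

In use, two systems created from `handler.get("smoke.particles")` would both pick up the new values on their next `update()` after `onFileChanged` fires, and later updates would do no extra work.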

While developing the particle system framework I was made aware of some shortcomings in my graphics object class hierarchy. The intention of this class hierarchy is to allow new graphics object types to be created and inserted into it, and have them automatically support frustum culling, casting and receiving shadows, having their transform matrices handled, having their materials applied and having their shader parameters updated. A good few hours of refactoring were required to make the system work as intended, but in the end it turned out well. Particle systems are currently culled using axis-aligned bounding boxes (which are updated on the fly). I do not currently intend to provide support for individual particle culling (I do not believe it would provide performance benefits). As for casting and receiving shadows, this is currently untested, but in theory, once the shaders are correctly set up, it should just work.
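Updating the bounding box on the fly can be as simple as one pass over the live particles each frame. The sketch below is an assumption about how this might be done, with hypothetical `Particle`, `Aabb` and `computeBounds` names. Each particle is padded by its half size so that the quad's corners stay inside the box.

```cpp
#include <algorithm>
#include <cassert>
#include <limits>
#include <vector>

// Hypothetical per-particle state needed for bounds.
struct Particle {
    float x, y, z;   // centre position
    float halfSize;  // half of the quad's edge length
};

struct Aabb {
    float min[3];
    float max[3];
};

// Recomputes the system's axis-aligned bounding box from its live particles.
// With no particles the box comes back inverted (min > max), which a caller
// can treat as "nothing to draw".
Aabb computeBounds(const std::vector<Particle>& particles) {
    const float big = std::numeric_limits<float>::max();
    Aabb box = {{big, big, big}, {-big, -big, -big}};
    for (const Particle& p : particles) {
        assert(p.halfSize >= 0.0f);
        const float centre[3] = {p.x, p.y, p.z};
        for (int i = 0; i < 3; ++i) {
            box.min[i] = std::min(box.min[i], centre[i] - p.halfSize);
            box.max[i] = std::max(box.max[i], centre[i] + p.halfSize);
        }
    }
    return box;
}
```

Since the vertex data is already being rewritten every frame, this pass could be folded into the same loop.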

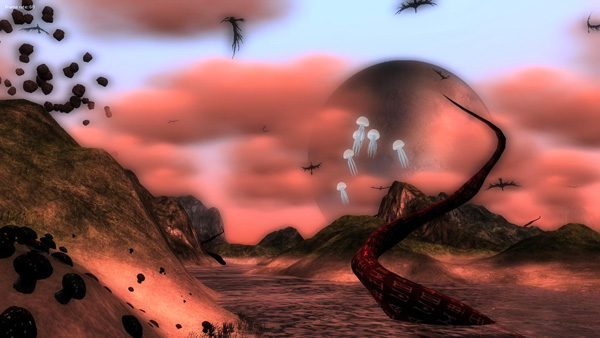

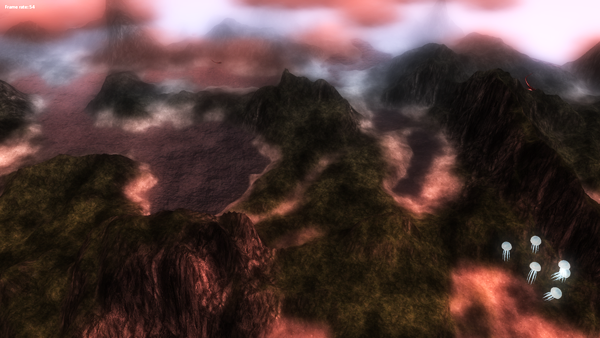

At this point the technical work for my particle systems is close to complete. The end result is a particle system that is efficient (fewer draw calls, fits into the culling framework) and which supports hot-loading.

In future I intend to implement soft particles in Transcendence. Soft particles is a technique that improves the visual appeal of particle systems by having them become transparent at points where they intersect other surfaces. For example, without support for soft particles, smoke rising through a vent on the ground would not look quite right, because the hard, straight line where the particle intersects the geometry representing the vent would be very obvious and jarring. Soft particles work by having the pixel shader sample the scene's depth buffer so it can compare its own depth to the existing scene depth. If the two results are very similar the pixel is made transparent. The end result is that particles no longer have that unrealistic hard-edged appearance when intersecting other scene geometry. Another great benefit of soft particle support is that other surfaces (most notably water) can be rendered in the soft particle layer. I implemented soft particles in ThoroughTec's in-house engine a few months ago. Although it works really well for dust and smoke, the most noticeable visual improvement was to our water, which now has a soft transition as it meets the shore. The terrain for our current project has an extensive network of rivers, as well as oceans and lakes. The lack of hard edges makes it look significantly more realistic and has improved the overall look of the terrain.
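The depth comparison itself is only a couple of lines. Below it is written as plain C++ rather than shader code, as a sketch of the usual formulation and not the ThoroughTec implementation. It assumes both depths are linear view-space distances, and `softParticleFade` and `fadeDistance` are hypothetical names. The result would be multiplied into the particle's alpha.

```cpp
#include <algorithm>
#include <cassert>

// Returns 0 where the particle touches (or is behind) the scene geometry and
// ramps up to 1 once the particle is at least fadeDistance in front of it.
float softParticleFade(float sceneDepth, float particleDepth, float fadeDistance) {
    assert(fadeDistance > 0.0f);
    const float t = (sceneDepth - particleDepth) / fadeDistance;
    return std::min(1.0f, std::max(0.0f, t));  // clamp to [0, 1]
}
```

A larger `fadeDistance` gives a softer, wider transition, which is likely what you would want for something like a shoreline.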

There is a moderate amount of work required before Transcendence Engine is ready for this, but it should be pretty straightforward to repeat the process.
