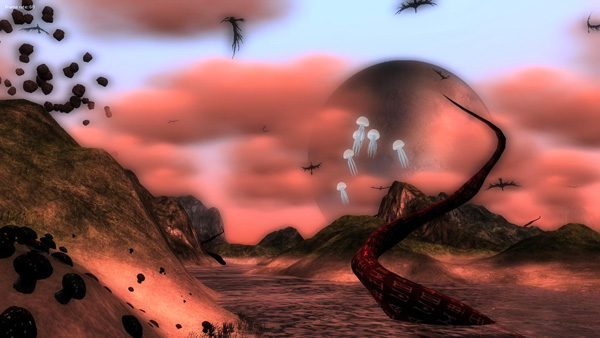

In this post I discuss my implementation of path-finding in the real-time strategy game I'm currently building (the working title for the game is Zyrtuul, though I might end up changing that).

A commonly used path-finding algorithm in game development is the A* algorithm (pronounced 'A star') which is an extension of the older well-known graph traversal algorithm called Dijkstra's Algorithm. I coded up a C++ implementation of various path-finding algorithms back in 2005 (as part of one of the demonstration applications that accompanied my CompSci master's degree), so I chose to re-use my old implementation of A* rather than re-inventing the wheel.

I started off coding the game as well as the game engine in C++ because, at the time, my main interest was in developing the underlying technology. But as it progressed I started wanting to focus on the actual game rather than the technology. Coding the engine and game simultaneously was simply taking too long (and I wasn't really learning anything new, having done so much engine work over the years already). So I decided to switch to the Unity engine for the game.

Unity doesn't support C++; instead you write scripts in C# or JavaScript (edit: apparently Boo and their own language called UnityScript are also supported). I am therefore now coding in C#, simply because I have more experience with it than with the others.

Converting the code from C++ to C# was initially pretty straightforward, until I realized that C# does not provide a priority queue (a fundamental component of the path-finding algorithm) as part of its library. In my original C++ implementation I was using the STL priority queue, so I would now have to either find an implementation online or write my own.

A priority queue is a data structure that orders its elements by some user-specified criterion, in our case the path node cost / distance during a path-finding query, so that the lowest-cost node can always be retrieved cheaply. It suits path queries well because it maintains that ordering automatically as items are added or removed, with no need to re-sort the whole collection each time.
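To make the idea concrete, here is a minimal sketch of a priority queue built on a binary heap. It is written in Python for brevity and is not the C# implementation used in the game; the class name `MinPriorityQueue` and its `push` / `pop` methods are made up for this illustration.

```python
class MinPriorityQueue:
    """Binary min-heap: the entry with the lowest cost is always at index 0."""

    def __init__(self):
        # List of (cost, item) pairs, laid out as an implicit binary tree.
        self._heap = []

    def __len__(self):
        return len(self._heap)

    def push(self, cost, item):
        # Append at the bottom, then swap upwards while cheaper than the parent.
        self._heap.append((cost, item))
        i = len(self._heap) - 1
        while i > 0:
            parent = (i - 1) // 2
            if self._heap[i][0] >= self._heap[parent][0]:
                break
            self._heap[i], self._heap[parent] = self._heap[parent], self._heap[i]
            i = parent

    def pop(self):
        # Remove the cheapest entry, move the last entry to the root,
        # then swap downwards while one of the children is cheaper.
        if not self._heap:
            raise IndexError("pop from an empty priority queue")
        top = self._heap[0]
        last = self._heap.pop()
        if self._heap:
            self._heap[0] = last
            i, n = 0, len(self._heap)
            while True:
                left, right, smallest = 2 * i + 1, 2 * i + 2, i
                if left < n and self._heap[left][0] < self._heap[smallest][0]:
                    smallest = left
                if right < n and self._heap[right][0] < self._heap[smallest][0]:
                    smallest = right
                if smallest == i:
                    break
                self._heap[i], self._heap[smallest] = self._heap[smallest], self._heap[i]
                i = smallest
        return top
```

Both `push` and `pop` only touch one branch of the tree, so each costs O(log n), which is what makes the structure attractive when a path query adds and removes thousands of nodes.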

It seems that the Microsoft C# team decided that a priority queue was too niche to be included in the .NET foundation library, so I was out of luck. Fortunately others had encountered this problem as well and so I was able to find a priority queue C# implementation online that, with some minor modification, suited my needs. Once the priority queue was done the rest of the graph traversal / path-finding code came together nicely. So I ended up getting a task that would ordinarily take a significant amount of time and effort done in about a day due to code re-use.
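For readers who haven't seen A* before, the sketch below shows roughly how the priority queue drives the search. It is an illustration in Python rather than the game's C# code, and it makes several assumptions of its own: a small grid given as strings with `#` for blocked cells, 4-directional movement with a step cost of 1, and a Manhattan-distance heuristic. The function name `a_star` is likewise just for this example.

```python
import heapq


def a_star(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None.

    grid is a list of equal-length strings; '#' marks a blocked cell.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected unit grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    # Queue entries are (estimated total cost, cost so far, cell).
    open_queue = [(heuristic(start), 0, start)]
    best_cost = {start: 0}
    came_from = {}

    while open_queue:
        _, cost, cell = heapq.heappop(open_queue)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale entry; a cheaper route to this cell was found later
        for d_row, d_col in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            row, col = cell[0] + d_row, cell[1] + d_col
            if not (0 <= row < len(grid) and 0 <= col < len(grid[0])):
                continue
            if grid[row][col] == "#":
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get((row, col), float("inf")):
                best_cost[(row, col)] = new_cost
                came_from[(row, col)] = cell
                heapq.heappush(
                    open_queue,
                    (new_cost + heuristic((row, col)), new_cost, (row, col)),
                )
    return None
```

If `heuristic` always returned 0, this would behave as Dijkstra's algorithm; the heuristic is the part that biases the search toward the goal.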

Path-finding solved the macro-navigation problem (navigation on a large scale), but micro-navigation was still a problem, both because the pathing grid has limited resolution and because the grid is not static -- moving entities can invalidate previously valid routes. A vehicle could easily determine that to get from one side of the map to the other it needed to move around a lake or large obstacle that was blocking its way, but it still had issues on a smaller scale. For example, if vehicle A was told to find a path to some point and then vehicle B was instructed to move to a location that blocks this path, vehicle A would eventually encounter vehicle B and need to determine how to deal with it.
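One simple way to notice that a stored route has gone stale is to re-check the remaining cells against the set of currently occupied cells. The sketch below (Python, for illustration only) assumes a path is a list of grid cells and that occupied cells are available as a set; neither detail, nor the function name `first_blocked_index`, comes from the game's code.

```python
def first_blocked_index(path, occupied, start_index=0):
    """Return the index of the first cell on the path that is occupied.

    Only cells from start_index onwards are checked, so a vehicle can skip
    the part of the route it has already travelled. Returns None if the
    remaining route is clear.
    """
    for i in range(start_index, len(path)):
        if path[i] in occupied:
            return i
    return None
```

In the vehicle A / vehicle B example, B's cell would be added to `occupied` when it parks, and A's next check would report where along its route the blockage sits.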

For this game I could get away with very simple collision detection and collision response based on bounding spheres and having vehicles 'pushed away' from one another when they are colliding. This partially solved the problem of vehicles coming into contact with one another (they would simply push one another out of the way), but was far from perfect as it could lead to 'jostling'. Also, it just made them look downright impolite. If two vehicles were headed directly toward one another, they would simply push head to head with neither able to get past. Alternatively, if you told many vehicles to move to a single location they would all mass together, jostling and pushing one another in an attempt to settle at that location.
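A rough sketch of this kind of 'push away' response is shown below, in Python for brevity. It assumes vehicles move on a flat ground plane, so positions are `(x, z)` pairs, and that each vehicle is pushed back by half of the overlap; the function name `separate` and both of those choices are assumptions for the sake of the example.

```python
import math


def separate(pos_a, radius_a, pos_b, radius_b):
    """Push two overlapping bounding spheres apart along the line between them.

    Positions are (x, z) tuples on the ground plane. Returns the new
    positions of a and b; they are unchanged if the spheres do not overlap.
    """
    dx = pos_b[0] - pos_a[0]
    dz = pos_b[1] - pos_a[1]
    dist = math.hypot(dx, dz)
    overlap = radius_a + radius_b - dist
    if overlap <= 0:
        return pos_a, pos_b
    if dist == 0:
        # Centres coincide, so pick an arbitrary direction to push along.
        dx, dz, dist = 1.0, 0.0, 1.0
    nx, nz = dx / dist, dz / dist
    half = overlap / 2
    new_a = (pos_a[0] - nx * half, pos_a[1] - nz * half)
    new_b = (pos_b[0] + nx * half, pos_b[1] + nz * half)
    return new_a, new_b
```

Note that the push only ever acts along the line joining the two centres, which is why two vehicles meeting head-on just shove each other backwards instead of sliding past.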

One attempted solution was a "frustration factor": each vehicle had a value that represented how 'frustrated' it was, the idea being that it would eventually decide to simply find a new path to its destination if it became annoyed enough. This was simply a floating point value that increased over time when the vehicle was too close to another vehicle, and decreased over time when it wasn't. If a vehicle's frustration factor rose beyond a certain threshold it would request a new route to its current destination. Unfortunately I spent far too much time trying to get this to give the desired results, and I found it to be too unpredictable. Eventually I decided that the moment a vehicle encounters another vehicle, one of the two must immediately find a new path. I arbitrarily decided that in path-blocking situations the unit that was created first gets to keep its current path and the more recently created unit has to find a new one.
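Both approaches can be sketched in a few lines. The Python below is illustrative only: the `Vehicle` fields, the growth and decay rates, the threshold value and the choice to reset frustration after a re-path are all assumptions, not values from the game.

```python
from dataclasses import dataclass

# Hypothetical tuning values, chosen only to make the example concrete.
FRUSTRATION_THRESHOLD = 3.0
GROWTH_PER_SECOND = 1.0
DECAY_PER_SECOND = 0.5


@dataclass
class Vehicle:
    creation_order: int          # lower means the unit was created earlier
    frustration: float = 0.0
    needs_repath: bool = False


def update_frustration(vehicle, crowded, dt):
    """First approach: frustration grows while crowded and decays otherwise."""
    if crowded:
        vehicle.frustration += GROWTH_PER_SECOND * dt
    else:
        vehicle.frustration = max(0.0, vehicle.frustration - DECAY_PER_SECOND * dt)
    if vehicle.frustration > FRUSTRATION_THRESHOLD:
        vehicle.frustration = 0.0   # assumption: start calm again after re-pathing
        vehicle.needs_repath = True


def resolve_block(a, b):
    """Final rule: the older unit keeps its path, the newer one re-paths.

    Returns the vehicle that has to find a new path.
    """
    newer = a if a.creation_order > b.creation_order else b
    newer.needs_repath = True
    return newer
```

The second function has no tuning values at all, which is a large part of why it behaves more predictably than the first.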

Quite a few other rules are also in place to handle various conditions and edge cases, but I think I've already written too much and so won't discuss these here. The navigation behaviour of the individual units is fairly solid at this point, but not quite perfect (it's close though, better than some commercial RTS games I've seen). However, it will suffice for now -- I can hone it further later on, when polishing the game up.
